#react useState json update


Top 20 MERN Stack Interview Questions You Need to Prepare

The MERN stack (MongoDB, Express.js, React, Node.js) is a popular full-stack development technology. Here’s a quick guide to the key questions you may encounter in MERN stack interviews:

What is the MERN stack? It includes MongoDB (NoSQL database), Express.js (web framework), React (UI library), and Node.js (runtime for backend).

MongoDB vs SQL? MongoDB is document-oriented, flexible, and scalable, while SQL is relational and uses tables.

How does Express.js support web development? Express simplifies routing, request handling, and middleware management, speeding up app development.

Main features of React? React is component-based, uses a virtual DOM, and supports one-way data binding for efficient rendering.

Why is Node.js popular? Node.js uses an event-driven, non-blocking I/O model, which lets a single process handle many concurrent requests efficiently.

MongoDB-Specific Questions

What does a MongoDB document represent? A document is a JSON-like object that holds data, allowing complex structures.

CRUD operations in MongoDB? CRUD stands for Create, Read, Update, and Delete—basic operations to manage data.

What are indexes in MongoDB? Indexes improve query performance by speeding up data retrieval.

How do you handle relationships in MongoDB? You can embed related data or store references to other documents (embedding vs. referencing).
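The embedding-versus-referencing choice can be illustrated with plain JavaScript objects standing in for documents. This is only a sketch: the field names, sample data, and the `resolveAuthor` helper are hypothetical, and no MongoDB driver is involved.

```javascript
// Embedding: the related data lives inside the parent document.
const postWithEmbeddedComments = {
  _id: "post1",
  title: "Hello MERN",
  comments: [
    { author: "ada", text: "Nice post" },
    { author: "lin", text: "Thanks" },
  ],
}

// Referencing: the parent stores only the id of a document kept elsewhere.
const users = [
  { _id: "user1", name: "Ada" },
  { _id: "user2", name: "Lin" },
]
const postWithReference = { _id: "post2", title: "Referencing", authorId: "user2" }

// Hypothetical helper: follow the reference by hand, as an application
// would have to when the data is not embedded.
function resolveAuthor(post, userCollection) {
  return userCollection.find(user => user._id === post.authorId) || null
}

const author = resolveAuthor(postWithReference, users)
console.log(postWithEmbeddedComments.comments.length, author.name)
```

Embedding keeps everything in one read, while referencing avoids duplicating data that is shared between documents.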

Express.js-Specific Questions

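What is middleware in Express.js? Middleware functions run between the incoming request and the final response. Each one receives the request and response objects plus a next function, and can modify them, end the response, or pass control to the next middleware (for example for logging, body parsing, or authentication).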

How do you handle routes in Express? Routes map URLs to controller functions to handle HTTP requests (GET, POST, etc.).

Security practices in Express? Implement CORS, input validation, and authentication to secure your application.
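To make the middleware idea from this section concrete, here is a simplified model of a middleware chain in plain JavaScript. It is not Express's implementation: `runChain`, `logger`, `requireAuth`, `handler`, and the request/response shapes are hypothetical stand-ins that only mimic the `(req, res, next)` calling convention.

```javascript
// Run middleware functions in order; each one decides whether to call next().
function runChain(middlewares, req, res) {
  let position = 0
  const next = () => {
    const middleware = middlewares[position++]
    if (middleware) middleware(req, res, next)
  }
  next()
  return res
}

// Records the request, then passes control on.
const logger = (req, res, next) => {
  res.log.push(`${req.method} ${req.url}`)
  next()
}

// Stops the chain early when no token is present.
const requireAuth = (req, res, next) => {
  if (!req.token) {
    res.status = 401
    return
  }
  next()
}

// Final handler: only reached if every earlier middleware called next().
const handler = (req, res) => {
  res.status = 200
  res.body = "ok"
}

const makeRes = () => ({ status: null, body: null, log: [] })
const chain = [logger, requireAuth, handler]
const allowed = runChain(chain, { method: "GET", url: "/", token: "abc" }, makeRes())
const denied = runChain(chain, { method: "GET", url: "/" }, makeRes())
console.log(allowed.status, denied.status)
```

The second request never reaches `handler`, which is the property that makes middleware a natural place for authentication and input validation.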

React-Specific Questions

Class vs Functional components? Class components use lifecycle methods; functional components use hooks like useState and useEffect.

How to manage state in React? State can be managed locally or using tools like Context API or Redux for global state.

What are React hooks? Hooks like useState and useEffect enable functional components to manage state and side effects without classes.

Node.js-Specific Questions

How does Node.js handle asynchronous programming? Using callbacks, promises, and async/await, Node handles async operations efficiently.

What is npm? npm is a package manager for Node.js, helping manage dependencies and libraries in your project.
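Returning to the asynchronous-programming question in this section, the three styles it names can be compared side by side. This is a minimal sketch; `loadUserCallback`, `loadUser`, and `greet` are hypothetical names, and a timer stands in for real I/O.

```javascript
// 1. Callback style: the result arrives through a function argument.
function loadUserCallback(id, callback) {
  setTimeout(() => callback(null, { id, name: "Ada" }), 10)
}

// 2. Promise style: the same operation wrapped in a Promise.
function loadUser(id) {
  return new Promise((resolve, reject) => {
    loadUserCallback(id, (error, user) => (error ? reject(error) : resolve(user)))
  })
}

// 3. async/await: reads like sequential code but does not block the event loop.
async function greet(id) {
  const user = await loadUser(id)
  return `Hello, ${user.name}`
}

// Calling an async function returns a Promise immediately.
const pending = greet(1)
pending.then(message => console.log(message))
```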

Full-Stack Development Questions

How to implement authentication in MERN? Use JWTs, sessions, or OAuth for secure user authentication and authorization.

Deployment options for MERN apps? Popular options include Heroku, AWS, and Docker for hosting and scaling MERN applications.

Advanced Topics

How to optimize MERN app performance? Optimize client-side rendering, cache data, improve queries, and minimize app load time for better performance.

Conclusion:

Mastering the MERN stack opens numerous web development opportunities. By practicing these interview questions and applying your knowledge in real projects, you’ll boost both your technical skills and interview confidence. Keep learning and stay updated with the latest trends!

Read the full article: https://blog.iihtsrt.com/mern-stack-interview-questions/


Python Full Stack Development Course AI + IoT Integrated | TechEntry

Join TechEntry's No.1 Python Full Stack Developer Course in 2025. Learn Full Stack Development with Python and become the best Full Stack Python Developer. Master Python, AI, IoT, and build advanced applications.

Why Settle for Just Full Stack Development? Become an AI Full Stack Engineer!

Transform your development expertise with our AI-focused Full Stack Python course, where you'll master the integration of advanced machine learning algorithms with Python’s robust web frameworks to build intelligent, scalable applications from frontend to backend.

Kickstart Your Development Journey!

Frontend Development

React: Build Dynamic, Modern Web Experiences:

What is Web?

Markup with HTML & JSX

Flexbox, Grid & Responsiveness

Bootstrap Layouts & Components

Frontend UI Framework

Core JavaScript & Object Orientation

Async JS promises, async/await

DOM & Events

Event Bubbling & Delegation

Ajax, Axios & fetch API

Functional React Components

Props & State Management

Dynamic Component Styling

Functions as Props

Hooks in React: useState, useEffect

Material UI

Custom Hooks

Supplement: Redux & Redux Toolkit

Version Control: Git & GitHub

Angular: Master a Full-Featured Framework:

What is Web?

Markup with HTML & Angular Templates

Flexbox, Grid & Responsiveness

Angular Material Layouts & Components

Core JavaScript & TypeScript

Asynchronous Programming Promises, Observables, and RxJS

DOM Manipulation & Events

Event Binding & Event Bubbling

HTTP Client, Ajax, Axios & Fetch API

Angular Components

Input & Output Property Binding

Dynamic Component Styling

Services & Dependency Injection

Angular Directives (Structural & Attribute)

Routing & Navigation

Reactive Forms & Template-driven Forms

State Management with NgRx

Custom Pipes & Directives

Version Control: Git & GitHub

Backend

Python

Python Overview and Setup

Networking and HTTP Basics

REST API Overview

Setting Up a Python Environment (Virtual Environments, Pip)

Introduction to Django Framework

Django Project Setup and Configuration

Creating Basic HTTP Servers with Django

Django URL Routing and Views

Handling HTTP Requests and Responses

JSON Parsing and Form Handling

Using Django Templates for Rendering HTML

CRUD API Creation and RESTful Services with Django REST Framework

Models and Database Integration

Understanding SQL and NoSQL Database Concepts

CRUD Operations with Django ORM

Database Connection Setup in Django

Querying and Data Handling with Django ORM

User Authentication Basics in Django

Implementing JSON Web Tokens (JWT) for Security

Role-Based Access Control

Advanced API Concepts: Pagination, Filtering, and Sorting

Caching Techniques for Faster Response

Rate Limiting and Security Practices

Deployment of Django Applications

Best Practices for Django Development

Database

MongoDB (NoSQL)

Introduction to NoSQL and MongoDB

Understanding Collections and Documents

Basic CRUD Operations in MongoDB

MongoDB Query Language (MQL) Basics

Inserting, Finding, Updating, and Deleting Documents

Using Filters and Projections in Queries

Understanding Data Types in MongoDB

Indexing Basics in MongoDB

Setting Up a Simple MongoDB Database (e.g., MongoDB Atlas)

Connecting to MongoDB from a Simple Application

Basic Data Entry and Querying with MongoDB Compass

Data Modeling in MongoDB: Embedding vs. Referencing

Overview of Aggregation Framework in MongoDB

SQL

Introduction to SQL (Structured Query Language)

Basic CRUD Operations: Create, Read, Update, Delete

Understanding Tables, Rows, and Columns

Primary Keys and Unique Constraints

Simple SQL Queries: SELECT, WHERE, and ORDER BY

Filtering Data with Conditions

Using Aggregate Functions: COUNT, SUM, AVG

Grouping Data with GROUP BY

Basic Joins: Combining Tables (INNER JOIN)

Data Types in SQL (e.g., INT, VARCHAR, DATE)

Setting Up a Simple SQL Database (e.g., SQLite or MySQL)

Connecting to a SQL Database from a Simple Application

Basic Data Entry and Querying with a GUI Tool

Data Validation Basics

Overview of Transactions and ACID Properties

AI and IoT

Introduction to AI Concepts

Getting Started with Python for AI

Machine Learning Essentials with scikit-learn

Introduction to Deep Learning with TensorFlow and PyTorch

Practical AI Project Ideas

Introduction to IoT Fundamentals

Building IoT Solutions with Python

IoT Communication Protocols

Building IoT Applications and Dashboards

IoT Security Basics

TechEntry Highlights

In-Office Experience: Engage in a collaborative in-office environment (on-site) for hands-on learning and networking.

Learn from Software Engineers: Gain insights from experienced engineers actively working in the industry today.

Career Guidance: Receive tailored advice on career paths and job opportunities in tech.

Industry Trends: Explore the latest software development trends to stay ahead in your field.

1-on-1 Mentorship: Access personalized mentorship for project feedback and ongoing professional development.

Hands-On Projects: Work on real-world projects to apply your skills and build your portfolio.

What You Gain:

A deep understanding of Front-end React.js and Back-end Python.

Practical skills in AI tools and IoT integration.

The confidence to work on real-time solutions and prepare for high-paying jobs.

The skills that are in demand across the tech industry, ensuring you're not just employable but sought-after.

Frequently Asked Questions

Q: What is Python, and why should I learn it?

A: Python is a versatile, high-level programming language known for its readability and ease of learning. It's widely used in web development, data science, artificial intelligence, and more.

Q: What are the prerequisites for learning Angular?

A: A basic understanding of HTML, CSS, and JavaScript is recommended before learning Angular.

Q: Do I need any prior programming experience to learn Python?

A: No, Python is beginner-friendly and designed to be accessible to those with no prior programming experience.

Q: What is React, and why use it?

A: React is a JavaScript library developed by Facebook for building user interfaces, particularly for single-page applications. It offers reusable components, fast performance, and one-way data flow.

Q: What is Django, and why should I learn it?

A: Django is a high-level web framework for building web applications quickly and efficiently using Python. It includes many built-in features for web development, such as authentication and an admin interface.

Q: What is the virtual DOM in React?

A: The virtual DOM represents the real DOM in memory. React uses it to detect changes and update the real DOM as needed, improving UI performance.

Q: Do I need to know Python before learning Django?

A: Yes, a basic understanding of Python is essential before diving into Django.

Q: What are props in React?

A: Props in React are objects used to pass information to a component, allowing data to be shared and utilized within the component.

Q: Why should I learn Angular?

A: Angular is a powerful framework for building dynamic, single-page web applications. It enhances your ability to create scalable and maintainable web applications and is highly valued in the job market.

Q: What is the difference between class-based components and functional components with hooks in React?

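A: Class-based components hold state on the component instance (this.state) and use lifecycle methods, while functional components manage state and side effects with hooks such as useState and useEffect. Functional components tend to be more concise and are the more common choice in current React code.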

For more, visit our website:

https://techentry.in/courses/python-fullstack-developer-course


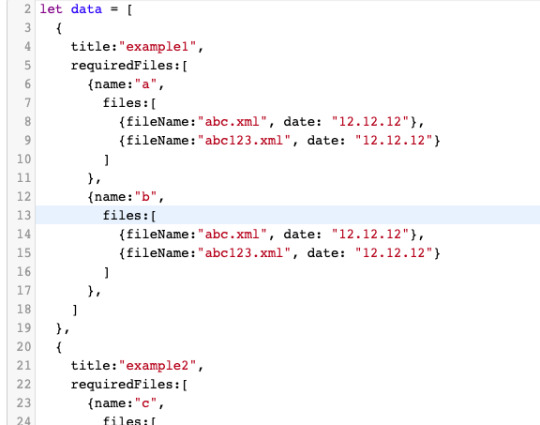

Updating nested JSON with React useState

Consider a JSON data sample like the one shown here. We want to add files to, and remove files from, the files arrays, which sit inside nested objects.

Here is what I did. Think of this as a list component: to add a file to, say, the second component group, I need the path to it. calenderID identifies the top-level object in the JSON (for example, the object labelled "example 2"), and fileId identifies which object inside requiredFiles is meant. Both are needed to update the correct files array.

I wrote a method like this:

const uploadFileForCalenderItem = (calenderID, fileId, file) => {
  setCalenderItems(resultList =>
    // Copy each level on the path to `files`; never mutate the previous state.
    // Assumes calenderID and fileId are array indexes.
    resultList.map((item, i) => i !== calenderID ? item : {
      ...item,
      requiredFiles: item.requiredFiles.map((field, j) =>
        j !== fileId ? field : { ...field, files: [...field.files, file] }
      ),
    })
  )
}
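The same update can also be written as a pure helper that copies every level on the path to files, which makes it easy to check outside React. This is a minimal sketch: `addFile` and the sample data are hypothetical, and it assumes both the state and requiredFiles are arrays addressed by index.

```javascript
// Return a new state array with `file` appended to
// items[itemIndex].requiredFiles[fieldIndex].files.
// Every object on the path is copied; everything else is reused as-is.
function addFile(items, itemIndex, fieldIndex, file) {
  return items.map((item, i) =>
    i !== itemIndex
      ? item
      : {
          ...item,
          requiredFiles: item.requiredFiles.map((field, j) =>
            j !== fieldIndex ? field : { ...field, files: [...field.files, file] }
          ),
        }
  )
}

// Hypothetical sample data shaped like the description above.
const before = [
  { name: "example 1", requiredFiles: [{ label: "id", files: [] }] },
  {
    name: "example 2",
    requiredFiles: [
      { label: "cv", files: ["a.pdf"] },
      { label: "photo", files: [] },
    ],
  },
]
const after = addFile(before, 1, 0, "b.pdf")
console.log(after[1].requiredFiles[0].files)
console.log(before[1].requiredFiles[0].files)
```

Inside the component this would be used as `setCalenderItems(items => addFile(items, calenderID, fileId, file))`. Because the previous state is left untouched, running the updater twice (as React's Strict Mode does in development) still adds the file only once.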


The Jamstack way of thinking and building websites is becoming more and more popular. Have you already tried Gatsby, Nuxt, or Gridsome (to cite only a few)? Chances are that your first contact was a “Wow!” moment: so many things are automatically set up and ready to use.

There are some challenges, though, one of which is search functionality. If you’re working on any sort of content-driven site, you’ll likely run into search and how to handle it. Can it be done without any external server-side technology? Search is not one of those things that come out of the box with Jamstack. Some extra decisions and implementation are required.

Fortunately, we have a bunch of options that might be more or less suited to a project. We could use Algolia’s powerful search-as-a-service API. It comes with a free plan that is restricted to non-commercial projects with a limited capacity. If we were to use WordPress with WPGraphQL as a data source, we could take advantage of WordPress native search functionality and Apollo Client. Raymond Camden recently explored a few Jamstack search options, including pointing a search form directly at Google.

In this article, we will build a search index and add search functionality to a Gatsby website with Lunr, a lightweight JavaScript library providing an extensible and customizable search without the need for external, server-side services. We used it recently to add “Search by Tartan Name” to our Gatsby project tartanify.com. We absolutely wanted persistent search as-you-type functionality, which brought some extra challenges. But that’s what makes it interesting, right? I’ll discuss some of the difficulties we faced and how we dealt with them in the second half of this article.

Getting started

For the sake of simplicity, let’s use the official Gatsby blog starter. Using a generic starter lets us abstract many aspects of building a static website. If you’re following along, make sure to install and run it:

gatsby new gatsby-starter-blog https://github.com/gatsbyjs/gatsby-starter-blog
cd gatsby-starter-blog
gatsby develop

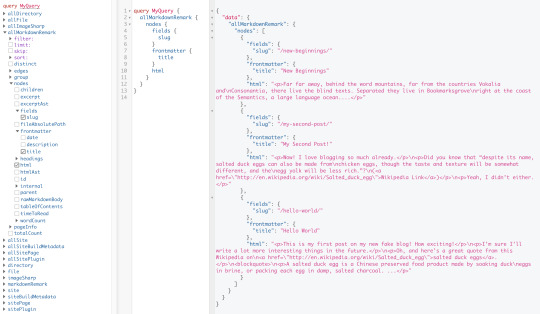

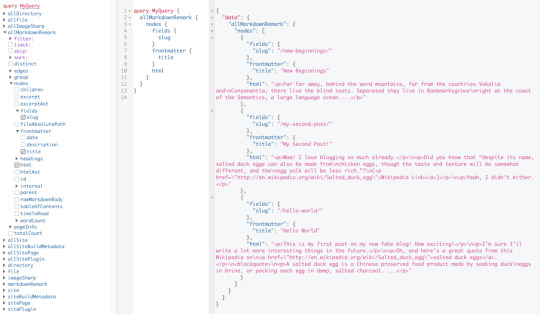

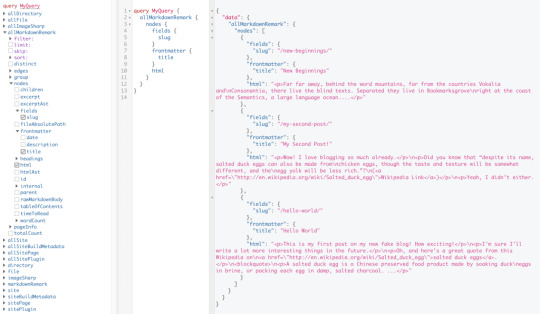

It’s a tiny blog with three posts we can view by opening up http://localhost:8000/___graphql in the browser.

Inverting index with Lunr.js 🙃

Lunr uses a record-level inverted index as its data structure. The inverted index stores the mapping for each word found within a website to its location (basically a set of page paths). It’s on us to decide which fields (e.g. title, content, description, etc.) provide the keys (words) for the index. For our blog example, I decided to include all titles and the content of each article. Dealing with titles is straightforward since they consist solely of words. Indexing content is a little more complex. My first try was to use the rawMarkdownBody field. Unfortunately, rawMarkdownBody introduces some unwanted keys resulting from the markdown syntax.
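To make the data structure concrete, here is a toy record-level inverted index in plain JavaScript. It is only a sketch of the idea, not how Lunr is implemented: `buildInvertedIndex` and the sample documents are hypothetical, and Lunr's own pipeline (stemming, stop words, scoring) is left out entirely.

```javascript
// Build a word -> set of slugs mapping from the chosen fields.
function buildInvertedIndex(documents, fields) {
  const index = new Map()
  for (const doc of documents) {
    for (const field of fields) {
      const words = String(doc[field] || "").toLowerCase().match(/[a-z0-9]+/g) || []
      for (const word of words) {
        if (!index.has(word)) index.set(word, new Set())
        index.get(word).add(doc.slug)
      }
    }
  }
  return index
}

const sampleDocs = [
  { slug: "/hello-world/", title: "Hello World", content: "A first post about ducks." },
  { slug: "/second/", title: "Second post", content: "More ducks, and a goose." },
]
const inverted = buildInvertedIndex(sampleDocs, ["title", "content"])
console.log([...inverted.get("ducks")]) // both slugs
console.log([...inverted.get("goose")]) // only "/second/"
```

Looking up a word is then a single map access that returns the set of pages containing it, which is why the choice of indexed fields matters so much.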

I obtained a “clean” index using the html field in conjunction with the striptags package (which, as the name suggests, strips out the HTML tags). Before we get into the details, let’s look into the Lunr documentation. Here’s how we create and populate the Lunr index. We will use this snippet in a moment, specifically in our gatsby-node.js file.

const index = lunr(function () {
  this.ref('slug')
  this.field('title')
  this.field('content')
  for (const doc of documents) {
    this.add(doc)
  }
})

documents is an array of objects, each with a slug, title and content property:

{
  slug: '/post-slug/',
  title: 'Post Title',
  content: 'Post content with all HTML tags stripped out.'
}

We will define a unique document key (the slug) and two fields (the title and content, or the key providers). Finally, we will add all of the documents, one by one. Let’s get started.

Creating an index in gatsby-node.js

Let’s start by installing the libraries that we are going to use.

yarn add lunr graphql-type-json striptags

Next, we need to edit the gatsby-node.js file. The code from this file runs once in the process of building a site, and our aim is to add index creation to the tasks that Gatsby executes on build. createResolvers is one of the Gatsby APIs controlling the GraphQL data layer. In this particular case, we will use it to create a new root field; let’s call it LunrIndex. Gatsby’s internal data store and query capabilities are exposed to GraphQL field resolvers on context.nodeModel. With getAllNodes, we can get all nodes of a specified type:

/* gatsby-node.js */
const { GraphQLJSONObject } = require(`graphql-type-json`)
const striptags = require(`striptags`)
const lunr = require(`lunr`)

exports.createResolvers = ({ cache, createResolvers }) => {
  createResolvers({
    Query: {
      LunrIndex: {
        type: GraphQLJSONObject,
        resolve: (source, args, context, info) => {
          const blogNodes = context.nodeModel.getAllNodes({
            type: `MarkdownRemark`,
          })
          const type = info.schema.getType(`MarkdownRemark`)
          return createIndex(blogNodes, type, cache)
        },
      },
    },
  })
}

Now let’s focus on the createIndex function. That’s where we will use the Lunr snippet we mentioned in the last section.

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const documents = []
  // Iterate over all posts
  for (const node of blogNodes) {
    const html = await type.getFields().html.resolve(node)
    // Once html is resolved, add a slug-title-content object to the documents array
    documents.push({
      slug: node.fields.slug,
      title: node.frontmatter.title,
      content: striptags(html),
    })
  }
  const index = lunr(function() {
    this.ref(`slug`)
    this.field(`title`)
    this.field(`content`)
    for (const doc of documents) {
      this.add(doc)
    }
  })
  return index.toJSON()
}

Have you noticed that instead of accessing the HTML directly with const html = node.html, we’re using an await expression? That’s because node.html isn’t available yet. The gatsby-transformer-remark plugin (used by our starter to parse Markdown files) does not generate HTML from markdown immediately when creating the MarkdownRemark nodes. Instead, html is generated lazily when the html field resolver is called in a query. The same actually applies to the excerpt that we will need in just a bit.

Let’s look ahead and think about how we are going to display search results. Users expect to obtain a link to the matching post, with its title as the anchor text. Very likely, they wouldn’t mind a short excerpt as well. Lunr’s search returns an array of objects representing matching documents by the ref property (which is the unique document key slug in our example). This array contains neither the document title nor the content. Therefore, we need to store the post title and excerpt corresponding to each slug somewhere. We can do that within our LunrIndex as below:

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const documents = []
  const store = {}
  for (const node of blogNodes) {
    const { slug } = node.fields
    const title = node.frontmatter.title
    const [html, excerpt] = await Promise.all([
      type.getFields().html.resolve(node),
      type.getFields().excerpt.resolve(node, { pruneLength: 40 }),
    ])
    documents.push({
      // unchanged
    })
    store[slug] = {
      title,
      excerpt,
    }
  }
  const index = lunr(function() {
    // unchanged
  })
  return { index: index.toJSON(), store }
}

Our search index changes only if one of the posts is modified or a new post is added. We don’t need to rebuild the index each time we run gatsby develop. To avoid unnecessary builds, let’s take advantage of the cache API:

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const cacheKey = `IndexLunr`
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  // unchanged
  const json = { index: index.toJSON(), store }
  await cache.set(cacheKey, json)
  return json
}

Enhancing pages with the search form component

We can now move on to the front end of our implementation. Let’s start by building a search form component.

touch src/components/search-form.js

I opt for a straightforward solution: an input of type="search", coupled with a label and accompanied by a submit button, all wrapped within a form tag with the search landmark role. We will add two event handlers, handleSubmit on form submit and handleChange on changes to the search input.

/* src/components/search-form.js */
import React, { useState, useRef } from "react"
import { navigate } from "@reach/router"

const SearchForm = ({ initialQuery = "" }) => {
  // Create a piece of state, and initialize it to initialQuery
  // query will hold the current value of the state,
  // and setQuery will let us change it
  const [query, setQuery] = useState(initialQuery)

  // We need to get a reference to the search input element
  const inputEl = useRef(null)

  // On input change use the current value of the input field (e.target.value)
  // to update the state's query value
  const handleChange = e => {
    setQuery(e.target.value)
  }

  // When the form is submitted navigate to /search
  // with a query q parameter equal to the value within the input search
  const handleSubmit = e => {
    e.preventDefault()
    // `inputEl.current` points to the mounted search input element
    const q = inputEl.current.value
    // Escape the value so characters such as & or # stay inside the q parameter
    navigate(`/search?q=${encodeURIComponent(q)}`)
  }

  return (
    <form role="search" onSubmit={handleSubmit}>
      <label htmlFor="search-input">
        Search for:
      </label>
      <input
        ref={inputEl}
        id="search-input"
        type="search"
        value={query}
        placeholder="e.g. duck"
        onChange={handleChange}
      />
      <button type="submit">Go</button>
    </form>
  )
}

export default SearchForm

Have you noticed that we’re importing navigate from the @reach/router package? That is necessary since neither Gatsby’s <Link/> nor its navigate provides in-route navigation with a query parameter. Instead, we can import @reach/router (there’s no need to install it since Gatsby already includes it) and use its navigate function. Now that we’ve built our component, let’s add it to our home page (as below) and 404 page.

/* src/pages/index.js */
// unchanged
import SearchForm from "../components/search-form"

const BlogIndex = ({ data, location }) => {
  // unchanged
  return (
    <Layout location={location} title={siteTitle}>
      <SEO title="All posts" />
      <Bio />
      <SearchForm />
      {/* unchanged */}

Search results page

Our SearchForm component navigates to the /search route when the form is submitted, but for the moment, there is nothing behind this URL. That means we need to add a new page:

touch src/pages/search.js

I proceeded by copying and adapting the content of the index.js page. One of the essential modifications concerns the page query (see the very bottom of the file). We will replace allMarkdownRemark with the LunrIndex field.

/* src/pages/search.js */
import React from "react"
import { Link, graphql } from "gatsby"
import { Index } from "lunr"
import Layout from "../components/layout"
import SEO from "../components/seo"
import SearchForm from "../components/search-form"

// We can access the results of the page GraphQL query via the data props
const SearchPage = ({ data, location }) => {
  const siteTitle = data.site.siteMetadata.title

  // We can read what follows the ?q= here
  // URLSearchParams provides a native way to get URL params
  // location.search.slice(1) gets rid of the "?"
  const params = new URLSearchParams(location.search.slice(1))
  const q = params.get("q") || ""

  // LunrIndex is available via page query
  const { store } = data.LunrIndex
  // Lunr in action here
  const index = Index.load(data.LunrIndex.index)
  let results = []
  try {
    // Search is a lunr method
    results = index.search(q).map(({ ref }) => {
      // Map search results to an array of {slug, title, excerpt} objects
      return {
        slug: ref,
        ...store[ref],
      }
    })
  } catch (error) {
    console.log(error)
  }
  return (
    // We will take care of this part in a moment
  )
}

export default SearchPage

export const pageQuery = graphql`
  query {
    site {
      siteMetadata {
        title
      }
    }
    LunrIndex
  }
`
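The query-string round trip between the form and the results page can be tried outside Gatsby. In this sketch `buildSearchUrl` and `readQuery` are hypothetical helper names; `encodeURIComponent` and `URLSearchParams` are standard APIs, and `URLSearchParams` ignores a single leading "?" on its own.

```javascript
// Build the URL the search form navigates to, escaping the user's input.
function buildSearchUrl(query) {
  return `/search?q=${encodeURIComponent(query)}`
}

// Read the query back from a location.search-style string.
function readQuery(searchString) {
  return new URLSearchParams(searchString).get("q") || ""
}

const searchUrl = buildSearchUrl("loch & ness")
const queryString = searchUrl.slice(searchUrl.indexOf("?"))
console.log(searchUrl)              // /search?q=loch%20%26%20ness
console.log(readQuery(queryString)) // loch & ness
console.log(readQuery(""))          // empty string when q is missing
```

Escaping matters because an unescaped "&" in the input would otherwise be read as the start of a second parameter.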

Now that we know how to retrieve the query value and the matching posts, let’s display the content of the page. Notice that on the search page we pass the query value to the <SearchForm /> component via the initialQuery prop. When the user arrives at the search results page, their search query should remain in the input field.

return (
  <Layout location={location} title={siteTitle}>
    <SEO title="Search results" />
    {q ? <h1>Search results</h1> : <h1>What are you looking for?</h1>}
    <SearchForm initialQuery={q} />
    {results.length ? (
      results.map(result => {
        return (
          <article key={result.slug}>
            <h2>
              <Link to={result.slug}>
                {result.title || result.slug}
              </Link>
            </h2>
            <p>{result.excerpt}</p>
          </article>
        )
      })
    ) : (
      <p>Nothing found.</p>
    )}
  </Layout>
)

You can find the complete code in this gatsby-starter-blog fork and the live demo deployed on Netlify.

Instant search widget

Finding the most “logical” and user-friendly way of implementing search may be a challenge in and of itself. Let’s now switch to the real-life example of tartanify.com — a Gatsby-powered website gathering 5,000+ tartan patterns. Since tartans are often associated with clans or organizations, the possibility to search a tartan by name seems to make sense. We built tartanify.com as a side project where we feel absolutely free to experiment with things. We didn’t want a classic search results page but an instant search “widget.” Often, a given search keyword corresponds with a number of results — for example, “Ramsay” comes in six variations. We imagined the search widget would be persistent, meaning it should stay in place when a user navigates from one matching tartan to another.

Let me show you how we made it work with Lunr. The first step of building the index is very similar to the gatsby-starter-blog example, only simpler:

/* gatsby-node.js */
exports.createResolvers = ({ cache, createResolvers }) => {
  createResolvers({
    Query: {
      LunrIndex: {
        type: GraphQLJSONObject,
        resolve(source, args, context) {
          const siteNodes = context.nodeModel.getAllNodes({
            type: `TartansCsv`,
          })
          return createIndex(siteNodes, cache)
        },
      },
    },
  })
}

const createIndex = async (nodes, cache) => {
  const cacheKey = `LunrIndex`
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  const store = {}
  const index = lunr(function() {
    this.ref(`slug`)
    this.field(`title`)
    // Declare the loop variable so it does not leak as an implicit global
    for (const node of nodes) {
      const { slug } = node.fields
      const doc = {
        slug,
        title: node.fields.Unique_Name,
      }
      store[slug] = {
        title: doc.title,
      }
      this.add(doc)
    }
  })
  const json = { index: index.toJSON(), store }
  await cache.set(cacheKey, json)
  return json
}

We opted for instant search, which means that search is triggered by any change in the search input instead of a form submission.

/* src/components/searchwidget.js */
import React, { useState } from "react"
import lunr, { Index } from "lunr"
import { graphql, useStaticQuery } from "gatsby"
import SearchResults from "./searchresults"

const SearchWidget = () => {
  const [value, setValue] = useState("")
  // results is now a state variable
  const [results, setResults] = useState([])

  // Since it's not a page component, useStaticQuery for querying data
  // https://www.gatsbyjs.org/docs/use-static-query/
  const { LunrIndex } = useStaticQuery(graphql`
    query {
      LunrIndex
    }
  `)
  const index = Index.load(LunrIndex.index)
  const { store } = LunrIndex

  const handleChange = e => {
    const query = e.target.value
    setValue(query)
    try {
      const search = index.search(query).map(({ ref }) => {
        return {
          slug: ref,
          ...store[ref],
        }
      })
      setResults(search)
    } catch (error) {
      console.log(error)
    }
  }

  return (
    <div className="search-wrapper">
      {/* You can use a form tag as well, as long as we prevent the default submit behavior */}
      <div role="search">
        <label htmlFor="search-input" className="visually-hidden">
          Search Tartans by Name
        </label>
        <input
          id="search-input"
          type="search"
          value={value}
          onChange={handleChange}
          placeholder="Search Tartans by Name"
        />
      </div>
      <SearchResults results={results} />
    </div>
  )
}

export default SearchWidget

The SearchResults are structured like this:

/* src/components/searchresults.js */
import React from "react"
import { Link } from "gatsby"

const SearchResults = ({ results }) => (
  <div>
    {results.length ? (
      <>
        <h2>{results.length} tartan(s) matched your query</h2>
        <ul>
          {results.map(result => (
            <li key={result.slug}>
              <Link to={`/tartan/${result.slug}`}>{result.title}</Link>
            </li>
          ))}
        </ul>
      </>
    ) : (
      <p>Sorry, no matches found.</p>
    )}
  </div>
)

export default SearchResults

Making it persistent

Where should we use this component? We could add it to the Layout component. The problem is that our search form will get unmounted on page changes that way. If a user wants to browse all tartans associated with the “Ramsay” clan, they will have to retype their query several times. That’s not ideal. Thomas Weibenfalk has written a great article on keeping state between pages with local state in Gatsby.js. We will use the same technique, where the wrapPageElement browser API sets persistent UI elements around pages. Let’s add the following code to gatsby-browser.js. You might need to add this file to the root of your project.

/* gatsby-browser.js */
import React from "react"
import SearchWrapper from "./src/components/searchwrapper"

export const wrapPageElement = ({ element, props }) => (
  <SearchWrapper {...props}>{element}</SearchWrapper>
)

Now let’s add a new component file:

touch src/components/searchwrapper.js

Instead of adding the SearchWidget component to the Layout, we will add it to the SearchWrapper and the magic happens. ✨

/* src/components/searchwrapper.js */
import React from "react"
import SearchWidget from "./searchwidget"

const SearchWrapper = ({ children }) => (
  <>
    {children}
    <SearchWidget />
  </>
)

export default SearchWrapper

Creating a custom search query

At this point, I started to try different keywords but very quickly realized that Lunr’s default search query might not be the best solution when used for instant search. Why? Imagine that we are looking for tartans associated with the name MacCallum. While typing “MacCallum” letter-by-letter, this is the evolution of the results:

m – 2 matches (Lyon, Jeffrey M, Lyon, Jeffrey M (Hunting))

ma – no matches

mac – 1 match (Brighton Mac Dermotte)

macc – no matches

macca – no matches

maccal – 1 match (MacCall)

maccall – 1 match (MacCall)

maccallu – no matches

maccallum – 3 matches (MacCallum, MacCallum #2, MacCallum of Berwick)

Users will probably type the full name and hit the button if we make a button available. But with instant search, a user is likely to abandon early because they may expect that the results can only narrow down as letters are added to the keyword query. That’s not the only problem. Here’s what we get with “Callum”:

c – 3 unrelated matches

ca – no matches

cal – no matches

call – no matches

callu – no matches

callum – one match

You can see the trouble if someone gives up halfway into typing the full query. Fortunately, Lunr supports more complex queries, including fuzzy matches, wildcards and boolean logic (e.g. AND, OR, NOT) for multiple terms. All of these are available either via a special query syntax, for example:

index.search("+*callum mac*")

We could also reach for the index query method to handle it programmatically. The first solution is not satisfying since it requires more effort from the user. I used the index.query method instead:

/* src/components/searchwidget.js */
const search = index
  .query(function(q) {
    // full term matching
    q.term(el)
    // OR (default)
    // trailing or leading wildcard
    q.term(el, {
      wildcard:
        lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING,
    })
  })
  .map(({ ref }) => {
    return {
      slug: ref,
      ...store[ref],
    }
  })

Why use full term matching with wildcard matching? That’s necessary for all keywords that “benefit” from the stemming process. For example, the stem of “different” is “differ.” As a consequence, queries with wildcards (such as differe*, differen* or different*) all result in no matches, while the full term queries differe, differen and different return matches. Fuzzy matches can be used as well. In our case, they are allowed only for terms longer than five characters:

q.term(el, { editDistance: el.length > 5 ? 1 : 0 }) q.term(el, { wildcard: lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING, })

The handleChange function also “cleans up” user inputs and ignores single-character terms:

/* src/components/searchwidget.js */ const handleChange = e => { const query = e.target.value || "" setValue(query) if (!query.length) { setResults([]) } const keywords = query .trim() // remove trailing and leading spaces .replace(/\*/g, "") // remove user's wildcards .toLowerCase() .split(/\s+/) // split by whitespaces // do nothing if the last typed keyword is shorter than 2 if (keywords[keywords.length - 1].length < 2) { return } try { const search = index .query(function(q) { keywords // filter out keywords shorter than 2 .filter(el => el.length > 1) // loop over keywords .forEach(el => { q.term(el, { editDistance: el.length > 5 ? 1 : 0 }) q.term(el, { wildcard: lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING, }) }) }) .map(({ ref }) => { return { slug: ref, ...store[ref], } }) setResults(search) } catch (error) { console.log(error) } }

Let’s check it in action:

m – pending

ma – 861 matches

mac – 600 matches

macc – 35 matches

macca – 12 matches

maccal – 9 matches

maccall – 9 matches

maccallu – 3 matches

maccallum – 3 matches

Searching for “Callum” works as well, resulting in four matches: Callum, MacCallum, MacCallum #2, and MacCallum of Berwick. There is one more problem, though: multi-term queries. Say you’re looking for “Loch Ness.” There are two tartans associated with that term, but with the default OR logic, you get a grand total of 96 results. (There are plenty of other lakes in Scotland.) I wound up deciding that an AND search would work better for this project. Unfortunately, Lunr does not support nested queries, and what we actually need is (keyword1 OR *keyword1*) AND (keyword2 OR *keyword2*). To overcome this, I ended up moving the terms loop outside the query method and intersecting the results per term. (By intersecting, I mean finding all slugs that appear in all of the per-single-keyword results.)

/* src/components/searchwidget.js */ try { // andSearch stores the intersection of all per-term results let andSearch = [] keywords .filter(el => el.length > 1) // loop over keywords .forEach((el, i) => { // per-single-keyword results const keywordSearch = index .query(function(q) { q.term(el, { editDistance: el.length > 5 ? 1 : 0 }) q.term(el, { wildcard: lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING, }) }) .map(({ ref }) => { return { slug: ref, ...store[ref], } }) // intersect current keywordSearch with andSearch andSearch = i > 0 ? andSearch.filter(x => keywordSearch.some(el => el.slug === x.slug)) : keywordSearch }) setResults(andSearch) } catch (error) { console.log(error) }

The source code for tartanify.com is published on GitHub. You can see the complete implementation of the Lunr search there.

Final thoughts

Search is often a non-negotiable feature for finding content on a site. How important the search functionality actually is may vary from one project to another. Nevertheless, there is no reason to abandon it under the pretext that it does not tally with the static character of Jamstack websites. There are many possibilities. We’ve just discussed one of them. And, paradoxically in this specific example, the result was a better all-around user experience, thanks to the fact that implementing search was not an obvious task but instead required a lot of deliberation. We may not have been able to say the same with an over-the-counter solution.


How to Add Lunr Search to your Gatsby Website

The Jamstack way of thinking and building websites is becoming more and more popular.

Have you already tried Gatsby, Nuxt, or Gridsome (to cite only a few)? Chances are that your first contact was a “Wow!” moment — so many things are automatically set up and ready to use.

There are some challenges, though, one of which is search functionality. If you’re working on any sort of content-driven site, you’ll likely run into search and how to handle it. Can it be done without any external server-side technology?

Search is not one of those things that come out of the box with Jamstack. Some extra decisions and implementation are required.

Fortunately, we have a bunch of options that might be more or less adapted to a project. We could use Algolia’s powerful search-as-service API. It comes with a free plan that is restricted to non-commercial projects with a limited capacity. If we were to use WordPress with WPGraphQL as a data source, we could take advantage of WordPress native search functionality and Apollo Client. Raymond Camden recently explored a few Jamstack search options, including pointing a search form directly at Google.

In this article, we will build a search index and add search functionality to a Gatsby website with Lunr, a lightweight JavaScript library providing an extensible and customizable search without the need for external, server-side services. We used it recently to add “Search by Tartan Name” to our Gatsby project tartanify.com. We absolutely wanted persistent search as-you-type functionality, which brought some extra challenges. But that’s what makes it interesting, right? I’ll discuss some of the difficulties we faced and how we dealt with them in the second half of this article.

Getting started

For the sake of simplicity, let’s use the official Gatsby blog starter. Using a generic starter lets us abstract many aspects of building a static website. If you’re following along, make sure to install and run it:

gatsby new gatsby-starter-blog https://github.com/gatsbyjs/gatsby-starter-blog cd gatsby-starter-blog gatsby develop

It’s a tiny blog with three posts we can view by opening up http://localhost:8000/___graphql in the browser.

Inverting index with Lunr.js 🙃

Lunr uses a record-level inverted index as its data structure. The inverted index stores the mapping for each word found within a website to its location (basically a set of page paths). It’s on us to decide which fields (e.g. title, content, description, etc.) provide the keys (words) for the index.
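To make the idea of an inverted index concrete, here is a toy version in plain JavaScript. It is only a sketch: the function names (buildInvertedIndex, lookup) and the sample documents are made up for illustration, and Lunr's real index additionally stems words, scores matches, and tracks per-field metadata.

```javascript
// Toy inverted index: maps each lowercased word to the set of slugs whose
// fields contain it. This is an illustration, not Lunr's implementation.
function buildInvertedIndex(documents, fields) {
  const index = new Map()
  for (const doc of documents) {
    for (const field of fields) {
      // Split the field into lowercase alphanumeric words
      const words = String(doc[field] || "").toLowerCase().match(/[a-z0-9]+/g) || []
      for (const word of words) {
        if (!index.has(word)) index.set(word, new Set())
        index.get(word).add(doc.slug)
      }
    }
  }
  return index
}

// Look up a single word; returns an array of slugs (empty when unknown).
function lookup(index, word) {
  return [...(index.get(word.toLowerCase()) || [])]
}

// Hypothetical sample data in the same shape as the documents array
const sampleDocs = [
  { slug: "/hello-world/", title: "Hello World", content: "A first post about ducks." },
  { slug: "/my-second-post/", title: "My Second Post", content: "Ducks and geese." },
]
const sampleIndex = buildInvertedIndex(sampleDocs, ["title", "content"])
console.log(lookup(sampleIndex, "ducks")) // slugs of both posts
console.log(lookup(sampleIndex, "geese")) // slug of the second post only
```

Choosing the fields passed in as the second argument plays the same role as choosing which fields to register with Lunr: only words from those fields become keys.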

For our blog example, I decided to include all titles and the content of each article. Dealing with titles is straightforward since they are composed uniquely of words. Indexing content is a little more complex. My first try was to use the rawMarkdownBody field. Unfortunately, rawMarkdownBody introduces some unwanted keys resulting from the markdown syntax.

I obtained a “clean” index using the html field in conjunction with the striptags package (which, as the name suggests, strips out the HTML tags). Before we get into the details, let’s look into the Lunr documentation.

Here’s how we create and populate the Lunr index. We will use this snippet in a moment, specifically in our gatsby-node.js file.

const index = lunr(function () { this.ref('slug') this.field('title') this.field('content') for (const doc of documents) { this.add(doc) } })

documents is an array of objects, each with a slug, title and content property:

{ slug: '/post-slug/', title: 'Post Title', content: 'Post content with all HTML tags stripped out.' }

We will define a unique document key (the slug) and two fields (the title and content, or the key providers). Finally, we will add all of the documents, one by one.

Let’s get started.

Creating an index in gatsby-node.js

Let’s start by installing the libraries that we are going to use.

yarn add lunr graphql-type-json striptags

Next, we need to edit the gatsby-node.js file. The code from this file runs once in the process of building a site, and our aim is to add index creation to the tasks that Gatsby executes on build.

createResolvers is one of the Gatsby APIs controlling the GraphQL data layer. In this particular case, we will use it to create a new root field; let’s call it LunrIndex.

Gatsby’s internal data store and query capabilities are exposed to GraphQL field resolvers on context.nodeModel. With getAllNodes, we can get all nodes of a specified type:

/* gatsby-node.js */ const { GraphQLJSONObject } = require(`graphql-type-json`) const striptags = require(`striptags`) const lunr = require(`lunr`) exports.createResolvers = ({ cache, createResolvers }) => { createResolvers({ Query: { LunrIndex: { type: GraphQLJSONObject, resolve: (source, args, context, info) => { const blogNodes = context.nodeModel.getAllNodes({ type: `MarkdownRemark`, }) const type = info.schema.getType(`MarkdownRemark`) return createIndex(blogNodes, type, cache) }, }, }, }) }

Now let’s focus on the createIndex function. That’s where we will use the Lunr snippet we mentioned in the last section.

/* gatsby-node.js */ const createIndex = async (blogNodes, type, cache) => { const documents = [] // Iterate over all posts for (const node of blogNodes) { const html = await type.getFields().html.resolve(node) // Once html is resolved, add a slug-title-content object to the documents array documents.push({ slug: node.fields.slug, title: node.frontmatter.title, content: striptags(html), }) } const index = lunr(function() { this.ref(`slug`) this.field(`title`) this.field(`content`) for (const doc of documents) { this.add(doc) } }) return index.toJSON() }

Have you noticed that instead of accessing the HTML element directly with const html = node.html, we’re using an await expression? That’s because node.html isn’t available yet. The gatsby-transformer-remark plugin (used by our starter to parse Markdown files) does not generate HTML from markdown immediately when creating the MarkdownRemark nodes. Instead, html is generated lazily when the html field resolver is called in a query. The same actually applies to the excerpt that we will need in just a bit.

Let’s look ahead and think about how we are going to display search results. Users expect to obtain a link to the matching post, with its title as the anchor text. Very likely, they wouldn’t mind a short excerpt as well.

Lunr’s search returns an array of objects representing matching documents by the ref property (which is the unique document key slug in our example). This array does not contain the document title nor the content. Therefore, we need to store somewhere the post title and excerpt corresponding to each slug. We can do that within our LunrIndex as below:

/* gatsby-node.js */ const createIndex = async (blogNodes, type, cache) => { const documents = [] const store = {} for (const node of blogNodes) { const {slug} = node.fields const title = node.frontmatter.title const [html, excerpt] = await Promise.all([ type.getFields().html.resolve(node), type.getFields().excerpt.resolve(node, { pruneLength: 40 }), ]) documents.push({ // unchanged }) store[slug] = { title, excerpt, } } const index = lunr(function() { // unchanged }) return { index: index.toJSON(), store } }
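The way the store is meant to be used on the front end can be sketched without Lunr at all. The helper below is hypothetical (hydrateResults is not part of Lunr or Gatsby); it assumes matches shaped like Lunr's search results, that is, objects carrying a ref, and a store keyed by slug as built above.

```javascript
// Hypothetical helper: join Lunr-style matches ({ ref, score }) with the
// store built at index time. Refs that are missing from the store are skipped.
function hydrateResults(matches, store) {
  return matches
    .filter(({ ref }) => Object.prototype.hasOwnProperty.call(store, ref))
    .map(({ ref }) => ({ slug: ref, ...store[ref] }))
}

// Made-up sample data for illustration only
const exampleStore = {
  "/hello-world/": { title: "Hello World", excerpt: "A first post about ducks." },
}
const exampleMatches = [
  { ref: "/hello-world/", score: 1.2 },
  { ref: "/gone/", score: 0.4 },
]
console.log(hydrateResults(exampleMatches, exampleStore))
```

Keeping the store separate from the index means the search itself only ever deals with slugs, and the display data is looked up afterwards.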

Our search index changes only if one of the posts is modified or a new post is added. We don’t need to rebuild the index each time we run gatsby develop. To avoid unnecessary builds, let’s take advantage of the cache API:

/* gatsby-node.js */ const createIndex = async (blogNodes, type, cache) => { const cacheKey = `IndexLunr` const cached = await cache.get(cacheKey) if (cached) { return cached } // unchanged const json = { index: index.toJSON(), store } await cache.set(cacheKey, json) return json }

Enhancing pages with the search form component

We can now move on to the front end of our implementation. Let’s start by building a search form component.

touch src/components/search-form.js

I opt for a straightforward solution: an input of type="search", coupled with a label and accompanied by a submit button, all wrapped within a form tag with the search landmark role.

We will add two event handlers, handleSubmit on form submit and handleChange on changes to the search input.

/* src/components/search-form.js */
import React, { useState, useRef } from "react"
import { navigate } from "@reach/router"

const SearchForm = ({ initialQuery = "" }) => {
  // Create a piece of state, and initialize it to initialQuery
  // query will hold the current value of the state,
  // and setQuery will let us change it
  const [query, setQuery] = useState(initialQuery)

  // We need to get reference to the search input element
  const inputEl = useRef(null)

  // On input change use the current value of the input field (e.target.value)
  // to update the state's query value
  const handleChange = e => {
    setQuery(e.target.value)
  }

  // When the form is submitted navigate to /search
  // with a query q parameter equal to the value within the input search
  const handleSubmit = e => {
    e.preventDefault()
    // `inputEl.current` points to the mounted search input element
    const q = inputEl.current.value
    navigate(`/search?q=${q}`)
  }

  return (
    <form role="search" onSubmit={handleSubmit}>
      <label htmlFor="search-input">
        Search for:
      </label>
      <input
        ref={inputEl}
        id="search-input"
        type="search"
        value={query}
        placeholder="e.g. duck"
        onChange={handleChange}
      />
      <button type="submit">Go</button>
    </form>
  )
}

export default SearchForm

Have you noticed that we’re importing navigate from the @reach/router package? That is necessary since neither Gatsby’s <Link/> nor its navigate provides in-route navigation with a query parameter. Instead, we can import @reach/router (there’s no need to install it since Gatsby already includes it) and use its navigate function.
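One detail worth considering: the submit handler interpolates the input value straight into the URL, so a query containing characters such as & or # would be cut short when it is read back. A possible way around that, sketched here with two hypothetical helpers (buildSearchPath and readSearchQuery are names invented for this example), is to encode the value when building the path and rely on URLSearchParams to decode it.

```javascript
// Hypothetical helper: build the /search path with a safely encoded query.
function buildSearchPath(query) {
  return `/search?q=${encodeURIComponent(query.trim())}`
}

// Hypothetical helper: read the q parameter back from a location.search string.
// URLSearchParams accepts the string with or without the leading "?".
function readSearchQuery(search) {
  return new URLSearchParams(search).get("q") || ""
}

const path = buildSearchPath("loch & ness")
console.log(path)
console.log(readSearchQuery(path.slice(path.indexOf("?"))))
```

In the form component, this would amount to calling navigate(buildSearchPath(q)) instead of building the string inline.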

Now that we’ve built our component, let’s add it to our home page (as below) and 404 page.

/* src/pages/index.js */ // unchanged import SearchForm from "../components/search-form" const BlogIndex = ({ data, location }) => { // unchanged return ( <Layout location={location} title={siteTitle}> <SEO title="All posts" /> <Bio /> <SearchForm /> // unchanged

Search results page

Our SearchForm component navigates to the /search route when the form is submitted, but for the moment, there is nothing behind this URL. That means we need to add a new page:

touch src/pages/search.js

I proceeded by copying and adapting the content of the index.js page. One of the essential modifications concerns the page query (see the very bottom of the file). We will replace allMarkdownRemark with the LunrIndex field.

/* src/pages/search.js */ import React from "react" import { Link, graphql } from "gatsby" import { Index } from "lunr" import Layout from "../components/layout" import SEO from "../components/seo" import SearchForm from "../components/search-form"

// We can access the results of the page GraphQL query via the data props const SearchPage = ({ data, location }) => { const siteTitle = data.site.siteMetadata.title // We can read what follows the ?q= here // URLSearchParams provides a native way to get URL params // location.search.slice(1) gets rid of the "?" const params = new URLSearchParams(location.search.slice(1)) const q = params.get("q") || ""

// LunrIndex is available via page query const { store } = data.LunrIndex // Lunr in action here const index = Index.load(data.LunrIndex.index) let results = [] try { // Search is a lunr method results = index.search(q).map(({ ref }) => { // Map search results to an array of {slug, title, excerpt} objects return { slug: ref, ...store[ref], } }) } catch (error) { console.log(error) } return ( // We will take care of this part in a moment ) } export default SearchPage export const pageQuery = graphql` query { site { siteMetadata { title } } LunrIndex } `

Now that we know how to retrieve the query value and the matching posts, let’s display the content of the page. Notice that on the search page we pass the query value to the <SearchForm /> component via the initialQuery prop. When the user arrives at the search results page, their search query should remain in the input field.

return ( <Layout location={location} title={siteTitle}> <SEO title="Search results" /> {q ? <h1>Search results</h1> : <h1>What are you looking for?</h1>} <SearchForm initialQuery={q} /> {results.length ? ( results.map(result => { return ( <article key={result.slug}> <h2> <Link to={result.slug}> {result.title || result.slug} </Link> </h2> <p>{result.excerpt}</p> </article> ) }) ) : ( <p>Nothing found.</p> )} </Layout> )

You can find the complete code in this gatsby-starter-blog fork and the live demo deployed on Netlify.

Instant search widget

Finding the most “logical” and user-friendly way of implementing search may be a challenge in and of itself. Let’s now switch to the real-life example of tartanify.com — a Gatsby-powered website gathering 5,000+ tartan patterns. Since tartans are often associated with clans or organizations, the possibility to search a tartan by name seems to make sense.

We built tartanify.com as a side project where we feel absolutely free to experiment with things. We didn’t want a classic search results page but an instant search “widget.” Often, a given search keyword corresponds with a number of results — for example, “Ramsay” comes in six variations. We imagined the search widget would be persistent, meaning it should stay in place when a user navigates from one matching tartan to another.

Let me show you how we made it work with Lunr. The first step of building the index is very similar to the gatsby-starter-blog example, only simpler:

/* gatsby-node.js */
exports.createResolvers = ({ cache, createResolvers }) => {
  createResolvers({
    Query: {
      LunrIndex: {
        type: GraphQLJSONObject,
        resolve(source, args, context) {
          const siteNodes = context.nodeModel.getAllNodes({
            type: `TartansCsv`,
          })
          return createIndex(siteNodes, cache)
        },
      },
    },
  })
}

const createIndex = async (nodes, cache) => {
  const cacheKey = `LunrIndex`
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  const store = {}
  const index = lunr(function() {
    this.ref(`slug`)
    this.field(`title`)
    // Declare the loop variable so it does not leak into the global scope
    for (const node of nodes) {
      const { slug } = node.fields
      const doc = {
        slug,
        title: node.fields.Unique_Name,
      }
      store[slug] = {
        title: doc.title,
      }
      this.add(doc)
    }
  })
  const json = { index: index.toJSON(), store }
  // Wait for the cache write to finish before returning
  await cache.set(cacheKey, json)
  return json
}

We opted for instant search, which means that search is triggered by any change in the search input instead of a form submission.

/* src/components/searchwidget.js */
import React, { useState } from "react"
import lunr, { Index } from "lunr"
import { graphql, useStaticQuery } from "gatsby"
import SearchResults from "./searchresults"

const SearchWidget = () => {
  const [value, setValue] = useState("")
  // results is now a state variable
  const [results, setResults] = useState([])

  // Since it's not a page component, useStaticQuery for querying data
  // https://www.gatsbyjs.org/docs/use-static-query/
  const { LunrIndex } = useStaticQuery(graphql`
    query {
      LunrIndex
    }
  `)
  const index = Index.load(LunrIndex.index)
  const { store } = LunrIndex

  const handleChange = e => {
    const query = e.target.value
    setValue(query)
    try {
      const search = index.search(query).map(({ ref }) => {
        return {
          slug: ref,
          ...store[ref],
        }
      })
      setResults(search)
    } catch (error) {
      console.log(error)
    }
  }

  return (
    <div className="search-wrapper">
      {/* You can use a form tag as well, as long as we prevent the default submit behavior */}
      <div role="search">
        <label htmlFor="search-input" className="visually-hidden">
          Search Tartans by Name
        </label>
        <input
          id="search-input"
          type="search"
          value={value}
          onChange={handleChange}
          placeholder="Search Tartans by Name"
        />
      </div>
      <SearchResults results={results} />
    </div>
  )
}

export default SearchWidget

The SearchResults are structured like this:

/* src/components/searchresults.js */ import React from "react" import { Link } from "gatsby" const SearchResults = ({ results }) => ( <div> {results.length ? ( <> <h2>{results.length} tartan(s) matched your query</h2> <ul> {results.map(result => ( <li key={result.slug}> <Link to={`/tartan/${result.slug}`}>{result.title}</Link> </li> ))} </ul> </> ) : ( <p>Sorry, no matches found.</p> )} </div> ) export default SearchResults

Making it persistent

Where should we use this component? We could add it to the Layout component. The problem is that our search form will get unmounted on page changes that way. If a user wants to browse all tartans associated with the “Ramsay” clan, they will have to retype their query several times. That’s not ideal.

Thomas Weibenfalk has written a great article on keeping state between pages with local state in Gatsby.js. We will use the same technique, where the wrapPageElement browser API sets persistent UI elements around pages.

Let’s add the following code to the gatsby-browser.js. You might need to add this file to the root of your project.

/* gatsby-browser.js */ import React from "react" import SearchWrapper from "./src/components/searchwrapper" export const wrapPageElement = ({ element, props }) => ( <SearchWrapper {...props}>{element}</SearchWrapper> )

Now let’s add a new component file:

touch src/components/searchwrapper.js

Instead of adding SearchWidget component to the Layout, we will add it to the SearchWrapper and the magic happens. ✨

/* src/components/searchwrapper.js */ import React from "react" import SearchWidget from "./searchwidget"

const SearchWrapper = ({ children }) => ( <> {children} <SearchWidget /> </> ) export default SearchWrapper

Creating a custom search query

At this point, I started to try different keywords but very quickly realized that Lunr’s default search query might not be the best solution when used for instant search.

Why? Imagine that we are looking for tartans associated with the name MacCallum. While typing “MacCallum” letter-by-letter, this is the evolution of the results:

m – 2 matches (Lyon, Jeffrey M, Lyon, Jeffrey M (Hunting))

ma – no matches

mac – 1 match (Brighton Mac Dermotte)

macc – no matches

macca – no matches

maccal – 1 match (MacCall)

maccall – 1 match (MacCall)

maccallu – no matches

maccallum – 3 matches (MacCallum, MacCallum #2, MacCallum of Berwick)

Users will probably type the full name and hit the button if we make a button available. But with instant search, a user is likely to abandon early because they may expect that the results can only narrow down as letters are added to the keyword query.

That’s not the only problem. Here’s what we get with “Callum”:

c – 3 unrelated matches

ca – no matches

cal – no matches

call – no matches

callu – no matches

callum – one match

You can see the trouble if someone gives up halfway into typing the full query.

Fortunately, Lunr supports more complex queries, including fuzzy matches, wildcards and boolean logic (e.g. AND, OR, NOT) for multiple terms. All of these are available either via a special query syntax, for example:

index.search("+*callum mac*")

We could also reach for the index query method to handle it programmatically.

The first solution is not satisfying since it requires more effort from the user. I used the index.query method instead:

/* src/components/searchwidget.js */
const search = index
  .query(function(q) {
    // full term matching
    q.term(el)
    // OR (default)
    // trailing or leading wildcard
    q.term(el, {
      wildcard:
        lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING,
    })
  })
  .map(({ ref }) => {
    return {
      slug: ref,
      ...store[ref],
    }
  })

Why use full term matching with wildcard matching? That’s necessary for all keywords that “benefit” from the stemming process. For example, the stem of “different” is “differ.” As a consequence, queries with wildcards — such as differe*, differen* or different* — all result in no matches, while the full term queries differe, differen and different return matches.

Fuzzy matches can be used as well. In our case, they are allowed only for terms longer than five characters:

q.term(el, { editDistance: el.length > 5 ? 1 : 0 }) q.term(el, { wildcard: lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING, })
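To get a feel for what an edit distance of 1 permits, here is a plain Levenshtein distance in JavaScript together with the length rule from the snippet above. This is only an illustration under stated assumptions: levenshtein and allowedEditDistance are names invented for this sketch, and Lunr's own fuzzy matching is implemented differently and may not count every edit (transpositions, for instance) the same way.

```javascript
// Classic Levenshtein distance: the minimum number of single-character
// insertions, deletions, or substitutions needed to turn a into b.
function levenshtein(a, b) {
  // prev[j] holds the distance between the first i-1 chars of a and the first j chars of b
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j)
  for (let i = 1; i <= a.length; i++) {
    const curr = [i]
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1
      curr[j] = Math.min(
        prev[j] + 1, // deletion
        curr[j - 1] + 1, // insertion
        prev[j - 1] + cost // substitution (or match)
      )
    }
    prev = curr
  }
  return prev[b.length]
}

// Same rule as in the query: fuzziness only for terms longer than five characters.
function allowedEditDistance(term) {
  return term.length > 5 ? 1 : 0
}

console.log(levenshtein("maccalum", "maccallum")) // one missing letter
console.log(allowedEditDistance("ramsay"), allowedEditDistance("lyons"))
```

Restricting fuzziness to longer terms keeps short inputs, where a single edit changes a large share of the word, from matching almost everything.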

The handleChange function also “cleans up” user inputs and ignores single-character terms:

/* src/components/searchwidget.js */
const handleChange = e => {
  const query = e.target.value || ""
  setValue(query)
  if (!query.length) {
    setResults([])
  }
  const keywords = query
    .trim() // remove trailing and leading spaces
    .replace(/\*/g, "") // remove user's wildcards
    .toLowerCase()
    .split(/\s+/) // split by whitespaces
  // do nothing if the last typed keyword is shorter than 2
  if (keywords[keywords.length - 1].length < 2) {
    return
  }
  try {
    const search = index
      .query(function(q) {
        keywords
          // filter out keywords shorter than 2
          .filter(el => el.length > 1)
          // loop over keywords
          .forEach(el => {
            q.term(el, { editDistance: el.length > 5 ? 1 : 0 })
            q.term(el, {
              wildcard:
                lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING,
            })
          })
      })
      .map(({ ref }) => {
        return {
          slug: ref,
          ...store[ref],
        }
      })
    setResults(search)
  } catch (error) {
    console.log(error)
  }
}
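The clean-up step can also be pulled out into a small pure function, which makes it easy to try out on its own. The sketch below mirrors the logic of handleChange; the name parseKeywords and the shape of its return value are assumptions made for this example.

```javascript
// Hypothetical helper mirroring the clean-up in handleChange.
// Returns the usable keywords plus a flag telling whether a search should run.
function parseKeywords(rawQuery) {
  const words = (rawQuery || "")
    .trim() // remove trailing and leading spaces
    .replace(/\*/g, "") // remove user's wildcards
    .toLowerCase()
    .split(/\s+/) // split by whitespace
  const last = words[words.length - 1]
  return {
    // skip the search while the last typed keyword is shorter than 2
    ready: last.length >= 2,
    // ignore keywords shorter than 2 characters
    keywords: words.filter(word => word.length > 1),
  }
}

console.log(parseKeywords("  Loch* Ness "))
console.log(parseKeywords("mac c"))
```

With this in place, handleChange would only need to check the ready flag and pass keywords on to the query.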

Let’s check it in action:

m – pending

ma – 861 matches

mac – 600 matches

macc – 35 matches

macca – 12 matches

maccal – 9 matches

maccall – 9 matches

maccallu – 3 matches

maccallum – 3 matches

Searching for “Callum” works as well, resulting in four matches: Callum, MacCallum, MacCallum #2, and MacCallum of Berwick.

There is one more problem, though: multi-term queries. Say you’re looking for “Loch Ness.” There are two tartans associated with that term, but with the default OR logic, you get a grand total of 96 results. (There are plenty of other lakes in Scotland.)

I wound up deciding that an AND search would work better for this project. Unfortunately, Lunr does not support nested queries, and what we actually need is (keyword1 OR *keyword1*) AND (keyword2 OR *keyword2*).

To overcome this, I ended up moving the terms loop outside the query method and intersecting the results per term. (By intersecting, I mean finding all slugs that appear in all of the per-single-keyword results.)

/* src/components/searchwidget.js */
try {
  // andSearch stores the intersection of all per-term results
  let andSearch = []
  keywords
    .filter(el => el.length > 1)
    // loop over keywords
    .forEach((el, i) => {
      // per-single-keyword results
      const keywordSearch = index
        .query(function(q) {
          q.term(el, { editDistance: el.length > 5 ? 1 : 0 })
          q.term(el, {
            wildcard:
              lunr.Query.wildcard.LEADING | lunr.Query.wildcard.TRAILING,
          })
        })
        .map(({ ref }) => {
          return {
            slug: ref,
            ...store[ref],
          }
        })
      // intersect current keywordSearch with andSearch
      andSearch =
        i > 0
          ? andSearch.filter(x => keywordSearch.some(el => el.slug === x.slug))
          : keywordSearch
    })
  setResults(andSearch)
} catch (error) {
  console.log(error)
}
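The intersection step on its own can be sketched as a standalone function. This is not the code from tartanify.com, just one way to express the same idea; intersectBySlug and the sample data are hypothetical, and it uses Set lookups instead of nested some() calls, which may matter when the per-keyword result lists are long.

```javascript
// Hypothetical helper: keep only the items whose slug appears in every
// per-keyword result list. The order of the first list is preserved.
function intersectBySlug(resultSets) {
  if (resultSets.length === 0) return []
  const [first, ...rest] = resultSets
  // One Set of slugs per remaining keyword for fast membership checks
  const slugSets = rest.map(set => new Set(set.map(item => item.slug)))
  return first.filter(item => slugSets.every(slugs => slugs.has(item.slug)))
}

// Made-up per-keyword results for "loch" and "ness"
const lochResults = [
  { slug: "loch-ness", title: "Loch Ness" },
  { slug: "loch-lomond", title: "Loch Lomond" },
]
const nessResults = [
  { slug: "inverness", title: "Inverness" },
  { slug: "loch-ness", title: "Loch Ness" },
]
console.log(intersectBySlug([lochResults, nessResults]))
```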

The source code for tartanify.com is published on GitHub. You can see the complete implementation of the Lunr search there.

Final thoughts

Search is often a non-negotiable feature for finding content on a site. How important the search functionality actually is may vary from one project to another. Nevertheless, there is no reason to abandon it under the pretext that it does not tally with the static character of Jamstack websites. There are many possibilities. We’ve just discussed one of them.

And, paradoxically in this specific example, the result was a better all-around user experience, thanks to the fact that implementing search was not an obvious task but instead required a lot of deliberation. We may not have been able to say the same with an over-the-counter solution.

The post How to Add Lunr Search to your Gatsby Website appeared first on CSS-Tricks.

How to Add Lunr Search to your Gatsby Website published first on https://deskbysnafu.tumblr.com/


How to Add Lunr Search to your Gatsby Website

The Jamstack way of thinking and building websites is becoming more and more popular.

Have you already tried Gatsby, Nuxt, or Gridsome (to cite only a few)? Chances are that your first contact was a “Wow!” moment — so many things are automatically set up and ready to use.

There are some challenges, though, one of which is search functionality. If you’re working on any sort of content-driven site, you’ll likely run into search and how to handle it. Can it be done without any external server-side technology?

Search is not one of those things that come out of the box with Jamstack. Some extra decisions and implementation are required.

Fortunately, we have a bunch of options that might be more or less adapted to a project. We could use Algolia’s powerful search-as-service API. It comes with a free plan that is restricted to non-commercial projects with a limited capacity. If we were to use WordPress with WPGraphQL as a data source, we could take advantage of WordPress native search functionality and Apollo Client. Raymond Camden recently explored a few Jamstack search options, including pointing a search form directly at Google.

In this article, we will build a search index and add search functionality to a Gatsby website with Lunr, a lightweight JavaScript library providing an extensible and customizable search without the need for external, server-side services. We used it recently to add “Search by Tartan Name” to our Gatsby project tartanify.com. We absolutely wanted persistent search as-you-type functionality, which brought some extra challenges. But that’s what makes it interesting, right? I’ll discuss some of the difficulties we faced and how we dealt with them in the second half of this article.

Getting started

For the sake of simplicity, let’s use the official Gatsby blog starter. Using a generic starter lets us abstract many aspects of building a static website. If you’re following along, make sure to install and run it:

gatsby new gatsby-starter-blog https://github.com/gatsbyjs/gatsby-starter-blog cd gatsby-starter-blog gatsby develop

It’s a tiny blog with three posts we can view by opening up http://localhost:8000/___graphql in the browser.

Inverting index with Lunr.js

Lunr uses a record-level inverted index as its data structure. The inverted index stores the mapping for each word found within a website to its location (basically a set of page paths). It’s on us to decide which fields (e.g. title, content, description, etc.) provide the keys (words) for the index.

For our blog example, I decided to include all titles and the content of each article. Dealing with titles is straightforward since they are composed uniquely of words. Indexing content is a little more complex. My first try was to use the rawMarkdownBody field. Unfortunately, rawMarkdownBody introduces some unwanted keys resulting from the markdown syntax.

I obtained a “clean” index using the html field in conjunction with the striptags package (which, as the name suggests, strips out the HTML tags). Before we get into the details, let’s look into the Lunr documentation.

Here’s how we create and populate the Lunr index. We will use this snippet in a moment, specifically in our gatsby-node.js file.

const index = lunr(function () { this.ref('slug') this.field('title') this.field('content') for (const doc of documents) { this.add(doc) } })

documents is an array of objects, each with a slug, title and content property:

{ slug: '/post-slug/', title: 'Post Title', content: 'Post content with all HTML tags stripped out.' }

We will define a unique document key (the slug) and two fields (the title and content, or the key providers). Finally, we will add all of the documents, one by one.

Let’s get started.

Creating an index in gatsby-node.js

Let’s start by installing the libraries that we are going to use.

yarn add lunr graphql-type-json striptags

Next, we need to edit the gatsby-node.js file. The code from this file runs once in the process of building a site, and our aim is to add index creation to the tasks that Gatsby executes on build.

createResolvers is one of the Gatsby APIs controlling the GraphQL data layer. In this particular case, we will use it to create a new root field; let’s call it LunrIndex.

Gatsby’s internal data store and query capabilities are exposed to GraphQL field resolvers on context.nodeModel. With getAllNodes, we can get all nodes of a specified type:

/* gatsby-node.js */ const { GraphQLJSONObject } = require(`graphql-type-json`) const striptags = require(`striptags`) const lunr = require(`lunr`) exports.createResolvers = ({ cache, createResolvers }) => { createResolvers({ Query: { LunrIndex: { type: GraphQLJSONObject, resolve: (source, args, context, info) => { const blogNodes = context.nodeModel.getAllNodes({ type: `MarkdownRemark`, }) const type = info.schema.getType(`MarkdownRemark`) return createIndex(blogNodes, type, cache) }, }, }, }) }

Now let’s focus on the createIndex function. That’s where we will use the Lunr snippet we mentioned in the last section.

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const documents = []
  // Iterate over all posts
  for (const node of blogNodes) {
    const html = await type.getFields().html.resolve(node)
    // Once html is resolved, add a slug-title-content object to the documents array
    documents.push({
      slug: node.fields.slug,
      title: node.frontmatter.title,
      content: striptags(html),
    })
  }
  const index = lunr(function() {
    this.ref(`slug`)
    this.field(`title`)
    this.field(`content`)
    for (const doc of documents) {
      this.add(doc)
    }
  })
  return index.toJSON()
}

Have you noticed that instead of accessing the HTML element directly with const html = node.html, we’re using an await expression? That’s because node.html isn’t available yet. The gatsby-transformer-remark plugin (used by our starter to parse Markdown files) does not generate HTML from markdown immediately when creating the MarkdownRemark nodes. Instead, html is generated lazily when the html field resolver is called in a query. The same actually applies to the excerpt that we will need in just a bit.

Let’s look ahead and think about how we are going to display search results. Users expect to obtain a link to the matching post, with its title as the anchor text. Very likely, they wouldn’t mind a short excerpt as well.

Lunr’s search returns an array of objects representing matching documents by the ref property (which is the unique document key slug in our example). This array does not contain the document title nor the content. Therefore, we need to store somewhere the post title and excerpt corresponding to each slug. We can do that within our LunrIndex as below:

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const documents = []
  const store = {}
  for (const node of blogNodes) {
    const { slug } = node.fields
    const title = node.frontmatter.title
    const [html, excerpt] = await Promise.all([
      type.getFields().html.resolve(node),
      type.getFields().excerpt.resolve(node, { pruneLength: 40 }),
    ])
    documents.push({
      // unchanged
    })
    store[slug] = {
      title,
      excerpt,
    }
  }
  const index = lunr(function() {
    // unchanged
  })
  return { index: index.toJSON(), store }
}
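To make the role of the store concrete, here is a minimal sketch of how search results could later be joined with it. The store and matches below are hypothetical sample data (the matches are only shaped like Lunr results, each carrying a ref), and toDisplayResults is an illustrative helper name, not part of Lunr or Gatsby.

```javascript
// Hypothetical store, shaped like the one built in createIndex:
// a plain object keyed by slug.
const store = {
  "/hello-world/": { title: "Hello World", excerpt: "This is my first post" },
  "/my-second-post/": { title: "My Second Post", excerpt: "Wow! I love blogging" },
}

// Hypothetical matches, shaped like Lunr search results (ref + score).
const matches = [
  { ref: "/my-second-post/", score: 1.2 },
  { ref: "/hello-world/", score: 0.4 },
]

// Illustrative helper: look up each ref in the store and merge the
// stored title and excerpt into a displayable object.
const toDisplayResults = (matches, store) =>
  matches.map(({ ref }) => ({ slug: ref, ...store[ref] }))

const results = toDisplayResults(matches, store)
console.log(results[0].title) // prints "My Second Post"
```

Because the store is a plain object keyed by slug, each lookup is a single property access, and the order returned by the search is preserved.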

Our search index changes only if one of the posts is modified or a new post is added. We don’t need to rebuild the index each time we run gatsby develop. To avoid unnecessary builds, let’s take advantage of the cache API:

/* gatsby-node.js */
const createIndex = async (blogNodes, type, cache) => {
  const cacheKey = `IndexLunr`
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  // unchanged
  const json = { index: index.toJSON(), store }
  await cache.set(cacheKey, json)
  return json
}
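The get-or-build pattern can be tried in isolation. The sketch below assumes nothing about Gatsby beyond a cache with promise-returning get and set methods; createMemoryCache, getOrBuild and buildIndex are hypothetical names used only for illustration.

```javascript
// Illustrative in-memory stand-in for a cache with async get/set.
// Assumption: only the promise-returning get/set shape is mimicked.
const createMemoryCache = () => {
  const entries = new Map()
  return {
    get: async key => entries.get(key),
    set: async (key, value) => {
      entries.set(key, value)
      return value
    },
  }
}

// Return the cached value if present; otherwise build it, cache it, return it.
const getOrBuild = async (cache, cacheKey, build) => {
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  const fresh = await build()
  await cache.set(cacheKey, fresh)
  return fresh
}

// Usage: count how often the (hypothetical) expensive builder runs
// when the same key is requested twice.
const demo = async () => {
  let buildCount = 0
  const buildIndex = async () => {
    buildCount += 1
    return { index: {}, store: {} }
  }
  const cache = createMemoryCache()
  await getOrBuild(cache, `IndexLunr`, buildIndex)
  await getOrBuild(cache, `IndexLunr`, buildIndex)
  return buildCount
}
```

The second call finds the value stored by the first one, so the builder should run only once.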

Enhancing pages with the search form component

We can now move on to the front end of our implementation. Let’s start by building a search form component.

touch src/components/search-form.js

I opt for a straightforward solution: an input of type="search", coupled with a label and accompanied by a submit button, all wrapped within a form tag with the search landmark role.

We will add two event handlers, handleSubmit on form submit and handleChange on changes to the search input.

/* src/components/search-form.js */
import React, { useState, useRef } from "react"
import { navigate } from "@reach/router"
const SearchForm = ({ initialQuery = "" }) => {
  // Create a piece of state, and initialize it to initialQuery
  // query will hold the current value of the state,
  // and setQuery will let us change it
  const [query, setQuery] = useState(initialQuery)
  // We need to get a reference to the search input element
  const inputEl = useRef(null)
  // On input change use the current value of the input field (e.target.value)
  // to update the state's query value
  const handleChange = e => {
    setQuery(e.target.value)
  }
  // When the form is submitted navigate to /search
  // with a query q parameter equal to the value within the search input
  const handleSubmit = e => {
    e.preventDefault()
    // `inputEl.current` points to the mounted search input element
    const q = inputEl.current.value
    // Encode the value so characters such as & or # do not break the URL
    navigate(`/search?q=${encodeURIComponent(q)}`)
  }
  return (
    <form role="search" onSubmit={handleSubmit}>
      <label htmlFor="search-input">
        Search for:
      </label>
      <input
        ref={inputEl}
        id="search-input"
        type="search"
        value={query}
        placeholder="e.g. duck"
        onChange={handleChange}
      />
      <button type="submit">Go</button>
    </form>
  )
}
export default SearchForm

Have you noticed that we’re importing navigate from the @reach/router package? That is necessary since neither Gatsby’s <Link/> nor its navigate provides in-route navigation with a query parameter. Instead, we can import @reach/router (there’s no need to install it since Gatsby already includes it) and use its navigate function.
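Whatever navigation function is used, a query that contains characters such as & or # has to be percent-encoded before it goes into the URL. A minimal sketch of that step, where buildSearchPath is an illustrative helper and not part of Gatsby or @reach/router:

```javascript
// Illustrative helper: build the /search path for a given query string.
// encodeURIComponent escapes characters that have a special meaning in URLs.
const buildSearchPath = query => `/search?q=${encodeURIComponent(query)}`

console.log(buildSearchPath("duck")) // prints "/search?q=duck"
console.log(buildSearchPath("rock & roll")) // prints "/search?q=rock%20%26%20roll"
```

Without the encoding step, the second query would be cut off at the ampersand when the search page reads the q parameter.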

Now that we’ve built our component, let’s add it to our home page (as below) and 404 page.

/* src/pages/index.js */
// unchanged
import SearchForm from "../components/search-form"
const BlogIndex = ({ data, location }) => {
  // unchanged
  return (
    <Layout location={location} title={siteTitle}>
      <SEO title="All posts" />
      <Bio />
      <SearchForm />
      // unchanged

Search results page

Our SearchForm component navigates to the /search route when the form is submitted, but for the moment, there is nothing behind this URL. That means we need to add a new page:

touch src/pages/search.js

I proceeded by copying and adapting the content of the index.js page. One of the essential modifications concerns the page query (see the very bottom of the file). We will replace allMarkdownRemark with the LunrIndex field.

/* src/pages/search.js */
import React from "react"
import { Link, graphql } from "gatsby"
import { Index } from "lunr"
import Layout from "../components/layout"
import SEO from "../components/seo"
import SearchForm from "../components/search-form"
// We can access the results of the page GraphQL query via the data props
const SearchPage = ({ data, location }) => {
  const siteTitle = data.site.siteMetadata.title
  // We can read what follows the ?q= here
  // URLSearchParams provides a native way to get URL params
  // location.search.slice(1) gets rid of the "?"
  const params = new URLSearchParams(location.search.slice(1))
  const q = params.get("q") || ""
  // LunrIndex is available via page query
  const { store } = data.LunrIndex
  // Lunr in action here
  const index = Index.load(data.LunrIndex.index)
  let results = []
  try {
    // Search is a lunr method
    results = index.search(q).map(({ ref }) => {
      // Map search results to an array of {slug, title, excerpt} objects
      return {
        slug: ref,
        ...store[ref],
      }
    })
  } catch (error) {
    console.log(error)
  }
  return (
    // We will take care of this part in a moment
  )
}
export default SearchPage
export const pageQuery = graphql`
  query {
    site {
      siteMetadata {
        title
      }
    }
    LunrIndex
  }
`
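The query-string handling can be checked outside of React. The sketch below uses a hypothetical readQuery helper that mirrors the two lines from the page component. The URLSearchParams constructor ignores a leading "?", so the helper works whether or not the question mark has been sliced off.

```javascript
// Illustrative helper: read the q parameter from a location.search string,
// falling back to an empty string when the parameter is missing.
const readQuery = search => new URLSearchParams(search).get("q") || ""

console.log(readQuery("?q=duck")) // prints "duck"
console.log(readQuery("q=duck")) // prints "duck"
console.log(readQuery("?q=black+watch")) // prints "black watch"
console.log(readQuery("")) // prints ""
```

The empty-string fallback matters because get returns null for a missing parameter, and the value is later passed both to the search call and to the form's initial state.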

Now that we know how to retrieve the query value and the matching posts, let’s display the content of the page. Notice that on the search page we pass the query value to the <SearchForm /> component via the initialQuery prop. When the user arrives at the search results page, their search query should remain in the input field.

return (
  <Layout location={location} title={siteTitle}>
    <SEO title="Search results" />
    {q ? <h1>Search results</h1> : <h1>What are you looking for?</h1>}
    <SearchForm initialQuery={q} />
    {results.length ? (
      results.map(result => {
        return (
          <article key={result.slug}>
            <h2>
              <Link to={result.slug}>
                {result.title || result.slug}
              </Link>
            </h2>
            <p>{result.excerpt}</p>
          </article>
        )
      })
    ) : (
      <p>Nothing found.</p>
    )}
  </Layout>
)

You can find the complete code in this gatsby-starter-blog fork and the live demo deployed on Netlify.

Instant search widget

Finding the most “logical” and user-friendly way of implementing search may be a challenge in and of itself. Let’s now switch to the real-life example of tartanify.com — a Gatsby-powered website gathering 5,000+ tartan patterns. Since tartans are often associated with clans or organizations, the possibility to search a tartan by name seems to make sense.

We built tartanify.com as a side project where we feel absolutely free to experiment with things. We didn’t want a classic search results page but an instant search “widget.” Often, a given search keyword corresponds with a number of results — for example, “Ramsay” comes in six variations. We imagined the search widget would be persistent, meaning it should stay in place when a user navigates from one matching tartan to another.

Let me show you how we made it work with Lunr. The first step of building the index is very similar to the gatsby-starter-blog example, only simpler:

/* gatsby-node.js */
const { GraphQLJSONObject } = require(`graphql-type-json`)
const lunr = require(`lunr`)
exports.createResolvers = ({ cache, createResolvers }) => {
  createResolvers({
    Query: {
      LunrIndex: {
        type: GraphQLJSONObject,
        resolve(source, args, context) {
          const siteNodes = context.nodeModel.getAllNodes({
            type: `TartansCsv`,
          })
          return createIndex(siteNodes, cache)
        },
      },
    },
  })
}
const createIndex = async (nodes, cache) => {
  const cacheKey = `LunrIndex`
  const cached = await cache.get(cacheKey)
  if (cached) {
    return cached
  }
  const store = {}
  const index = lunr(function() {
    this.ref(`slug`)
    this.field(`title`)
    // Declare the loop variable with const to avoid an implicit global
    for (const node of nodes) {
      const { slug } = node.fields
      const doc = {
        slug,
        title: node.fields.Unique_Name,
      }
      store[slug] = {
        title: doc.title,
      }
      this.add(doc)
    }
  })
  const json = { index: index.toJSON(), store }
  await cache.set(cacheKey, json)
  return json
}

We opted for instant search, which means that search is triggered by any change in the search input instead of a form submission.

/* src/components/searchwidget.js */
import React, { useState } from "react"
import { Index } from "lunr"
import { graphql, useStaticQuery } from "gatsby"
import SearchResults from "./searchresults"
const SearchWidget = () => {
  const [value, setValue] = useState("")
  // results is now a state variable
  const [results, setResults] = useState([])
  // Since it's not a page component, useStaticQuery for querying data
  // https://www.gatsbyjs.org/docs/use-static-query/
  const { LunrIndex } = useStaticQuery(graphql`
    query {
      LunrIndex
    }
  `)
  const index = Index.load(LunrIndex.index)
  const { store } = LunrIndex
  const handleChange = e => {
    const query = e.target.value
    setValue(query)
    try {
      const search = index.search(query).map(({ ref }) => {
        return {
          slug: ref,
          ...store[ref],
        }
      })
      setResults(search)
    } catch (error) {
      console.log(error)
    }
  }
  return (
    <div className="search-wrapper">
      {/* You can use a form tag as well, as long as we prevent the default submit behavior */}
      <div role="search">
        <label htmlFor="search-input" className="visually-hidden">
          Search Tartans by Name
        </label>
        <input
          id="search-input"
          type="search"
          value={value}
          onChange={handleChange}
          placeholder="Search Tartans by Name"
        />
      </div>
      <SearchResults results={results} />
    </div>
  )
}
export default SearchWidget

The SearchResults are structured like this:

/* src/components/searchresults.js */
import React from "react"
import { Link } from "gatsby"
const SearchResults = ({ results }) => (
  <div>
    {results.length ? (
      <>
        <h2>{results.length} tartan(s) matched your query</h2>
        <ul>
          {results.map(result => (
            <li key={result.slug}>
              <Link to={`/tartan/${result.slug}`}>{result.title}</Link>
            </li>
          ))}
        </ul>
      </>
    ) : (
      <p>Sorry, no matches found.</p>
    )}
  </div>
)
export default SearchResults

Making it persistent

Where should we use this component? We could add it to the Layout component. The problem is that our search form will get unmounted on page changes that way. If a user wants to browse all tartans associated with the “Ramsay” clan, they will have to retype their query several times. That’s not ideal.

Thomas Weibenfalk has written a great article on keeping state between pages with local state in Gatsby.js. We will use the same technique, where the wrapPageElement browser API sets persistent UI elements around pages.

Let’s add the following code to the gatsby-browser.js. You might need to add this file to the root of your project.

/* gatsby-browser.js */
import React from "react"
import SearchWrapper from "./src/components/searchwrapper"
export const wrapPageElement = ({ element, props }) => (
  <SearchWrapper {...props}>{element}</SearchWrapper>
)

Now let’s add a new component file:

touch src/components/searchwrapper.js

Instead of adding the SearchWidget component to the Layout, we will add it to the SearchWrapper. Since the wrapper stays mounted across page changes, so does the widget, and the magic happens.

/* src/components/searchwrapper.js */
import React from "react"
import SearchWidget from "./searchwidget"
const SearchWrapper = ({ children }) => (
  <>
    {children}
    <SearchWidget />
  </>
)
export default SearchWrapper

Creating a custom search query

At this point, I started to try different keywords but very quickly realized that Lunr’s default search query might not be the best solution when used for instant search.

Why? Imagine that we are looking for tartans associated with the name MacCallum. While typing “MacCallum” letter-by-letter, this is the evolution of the results: